There are many myths about Electron app performance. Using NAPI-RS, which lets Node.js call native code written in Rust, we cut the time to checksum a single file from 800ms to 75ms. Here is how we did it.

Electron has a huge advantage: it allows developers to build desktop applications for different systems using the same codebase. That’s for sure.

However, we often hear that it is slow, consumes a lot of memory, and spawns multiple processes that slow down the whole system.

Despite that, some very popular applications are built using Electron. Not all of them are perfect, but Visual Studio Code seems to be the opposite of slow.

So, is Electron’s performance really that bad? In our experience, Electron is quite performant and responsive. It only needs a few adaptations.

In this article, we’ll show you how we reduced bottlenecks in our Electron application and made it fast.

The presented method can be applied to Node.js-based applications like API servers or other tools requiring high performance.

We’ll take a look at an Electron-based game launcher.

If you play games, you probably have a few of them installed. Most launchers download game files, install updates, and verify files so games can launch without any problems.

Some parts can’t be sped up because they depend on things like connection speed, but verifying downloaded or patched files is a different story. And if the game is big, the whole process can take an impressive amount of time.

This is our case.

The app we're going to analyze is responsible for downloading files and, if eligible, applying binary patches. When this is done, we must ensure that nothing got corrupted. It doesn't matter what causes the corruption: our users want to play the game, and we have to make that possible.

Now, let me give you some numbers. Our game consists of 44 files with a total size of around 4.7GB.

We must verify them all after downloading the game or an update. We used https://www.npmjs.com/package/crc to calculate the CRC32 of each file and verify it against the manifest file. Let’s see how performant this approach is. Time for some benchmarks.

All benchmarks were run on a 2021 14-inch MacBook Pro with an M1 Pro chip.

First, we need some files to verify. On macOS, we can create a few using the mkfile command:

mkfile -n 200m test_200m_1

But if we look at the content, we will see it’s all zeros!

This might skew our results. Instead, we will fill each file with random data using this command:

dd if=/dev/urandom of=test_200m_1 bs=1M count=200

Let's create 10 files, 200MB each, and because the data in them is random, they should have different checksums.

The benchmark code:

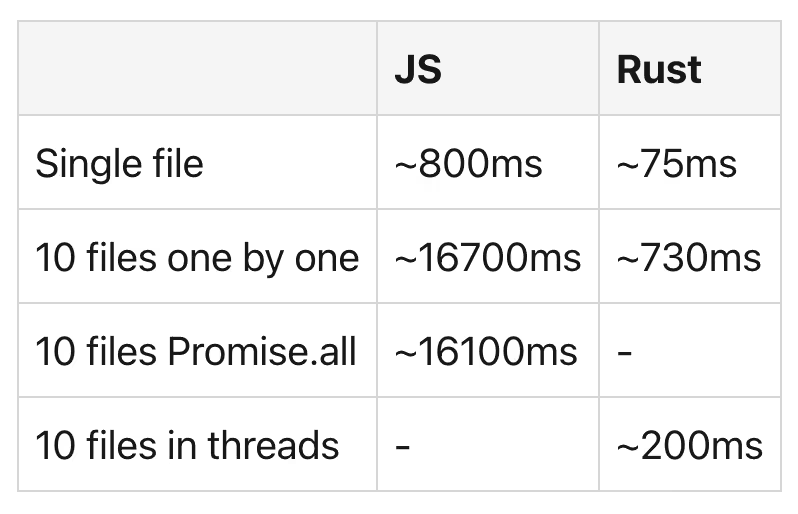

It takes around 800ms to create the read stream and calculate the checksum incrementally for a single 200MB file. We prefer streams because we can’t afford to load big files into system memory. If we calculate CRC32 for all 10 files one by one, the total is ~16700ms. It slows down after the 3rd file.

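Is it any better if we use Promise.all to run them concurrently? Not really. The result hovers around ~16100ms, and the difference is within measurement error. The checksum calculation is CPU-bound and still runs on a single JavaScript thread, so Promise.all only interleaves the work.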

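So, here are our results so far: around 800ms for a single file, ~16700ms for all 10 files one by one, and ~16100ms for all 10 files with Promise.all.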

There are many paths you can take when optimizing an Electron app, but we are primarily interested in four: Node.js Worker Threads, Node-API, Neon Bindings, and NAPI-RS.

Worker Threads require some boilerplate code around them. They can also be problematic if your code base is in TypeScript: it’s doable, but it requires additional tools like ts-node or extra configuration. We also don’t want to spawn who knows how many worker threads, as that would be inefficient too. More importantly, the performance problem is somewhere else: the checksum calculation itself is slow, so it will be slow wherever we put it.

Conclusion: spawning worker threads adds overhead without fixing the slow calculation, so Node.js Worker Threads are not for us.

If we want it fast, Node-API looks like a perfect solution. A library written in C/C++ must be fast. If you prefer to use C++ over C, the node-addon-api can help. This is probably one of the best solutions available, especially since it is officially supported by the Node.js team. It’s super stable once it is built, but it can be painful during development. Errors are often far from easy to understand, so if you are no expert in C, it might kick your ass very easily.

Conclusion: we don’t have C skills to fix the errors, so Node-API is not for us.

Now it is getting interesting: Neon Bindings. Rust in Node.js sounds amazing. It may be another buzzword, but is it only a buzzword? According to Neon, it is used by popular apps like 1Password and Signal (https://neon-bindings.com/docs/example-projects). Before deciding, let’s take a look at the other Rust-based option, NAPI-RS.

Conclusion: Neon Bindings looks promising, but let’s see how it compares to our last option.

If we look at the documentation, NAPI-RS’s docs look much better than Neon’s. The framework is sponsored by some big names in the industry. The extensive documentation and support of big brands are sufficient reasons for us to go with NAPI-RS rather than Neon Bindings.

Conclusion: NAPI-RS provides better documentation than the comparable Neon Bindings and is therefore the safer choice for us.

To optimize our Electron app, we’ll use NAPI-RS, which mixes Rust with Node.js.

Rust is an attractive addition to Node.js because of its performance, memory safety, community, and tools (cargo, rust-analyzer). No wonder it’s one of the most liked languages, and more and more companies are rewriting their modules in Rust.

With NAPI-RS, we need to build a library that uses https://crates.io/crates/crc32fast to calculate CRC32 extremely fast. NAPI-RS gives us ready-to-go workflows for building npm packages, so building the library and integrating it with the project is a breeze. Prebuilt binaries are supported too, so you don’t need a Rust environment at all to use the package: the correct build is downloaded and used whether you are on Windows, Linux, or macOS (Apple M1 machines are on the list too).

With the crc32fast library, we use a Hasher instance and update the checksum chunk by chunk as the file is read, just like in the JS implementation.
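
The streaming update described above can be sketched in plain Rust. This is a minimal sketch under stated assumptions, not the code from our library: to stay dependency-free, it uses a small bitwise `Crc32` type as a stand-in for `crc32fast::Hasher` (which follows the same `new`, `update`, `finalize` steps and is much faster). The names `checksum_reader` and `checksum_file` and the 64 KiB buffer size are chosen only for illustration, and the NAPI-RS export wrapper is left out.

```rust
use std::fs::File;
use std::io::{self, Read};
use std::path::Path;

/// Dependency-free stand-in for `crc32fast::Hasher` (an assumption of this sketch).
/// It produces the same CRC-32 (IEEE) value, just bit by bit and far more slowly.
struct Crc32 {
    state: u32,
}

impl Crc32 {
    fn new() -> Self {
        Crc32 { state: 0xFFFF_FFFF }
    }

    /// Feeds the next chunk of bytes into the running checksum.
    fn update(&mut self, bytes: &[u8]) {
        for &byte in bytes {
            self.state ^= u32::from(byte);
            for _ in 0..8 {
                // All-ones mask when the lowest bit is set, zero otherwise.
                let mask = (self.state & 1).wrapping_neg();
                self.state = (self.state >> 1) ^ (0xEDB8_8320 & mask);
            }
        }
    }

    fn finalize(self) -> u32 {
        !self.state
    }
}

/// Reads `reader` in fixed-size chunks so the whole file never sits in memory.
fn checksum_reader<R: Read>(mut reader: R) -> io::Result<u32> {
    let mut hasher = Crc32::new();
    let mut buffer = vec![0u8; 64 * 1024];
    loop {
        let read = reader.read(&mut buffer)?;
        if read == 0 {
            break; // end of stream
        }
        hasher.update(&buffer[..read]);
    }
    Ok(hasher.finalize())
}

/// Hypothetical helper: the checksum of a file on disk.
fn checksum_file(path: &Path) -> io::Result<u32> {
    checksum_reader(File::open(path)?)
}
```

Feeding the ASCII bytes `123456789` through `checksum_reader` yields `0xCBF43926`, the standard CRC-32 check value, which is a quick way to confirm that a replacement hasher computes the same checksum.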

It might sound like a fake or invalid result, but it’s just 75ms for a single file! That is more than ten times faster than the JS implementation. When we process all files one by one, it takes around 730ms (compared with ~16700ms in JS), so it also scales much better.

But that’s not all. There is one more quite simple optimization we can make. Instead of calling the native library N times (where N is the number of files), we can make it accept an array of paths and spawn a thread for each file.

Remember: Rust itself doesn't limit the number of threads, because these are OS threads managed by the system. The practical limit depends on the system, so if you know how many threads will be spawned and the number is not very high, you should be safe. Otherwise, we recommend setting a limit and processing the files, or doing the computation, in chunks.
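
One way to put such a limit in place is to work through the input in chunks and start at most a fixed number of threads per chunk. The sketch below is an illustration, not our production code: `map_in_chunks`, `max_threads`, and `work` are hypothetical names, and `work` stands for whatever per-file routine you run (for example, a checksum function). It relies on `std::thread::scope`, available since Rust 1.63.

```rust
use std::thread;

/// Runs `work` on every item, but never on more than `max_threads` at a time.
/// Results come back in the same order as `items`.
fn map_in_chunks<T, R, F>(items: &[T], max_threads: usize, work: F) -> Vec<R>
where
    T: Sync,
    R: Send,
    F: Fn(&T) -> R + Sync,
{
    // Share one reference to `work` with every thread instead of moving it.
    let work = &work;
    let mut results = Vec::with_capacity(items.len());

    // `chunks` panics on a size of 0, so fall back to one thread at a time.
    for chunk in items.chunks(max_threads.max(1)) {
        // Scoped threads may borrow `chunk` and `work`; the scope joins
        // all of them before the next chunk starts.
        let chunk_results: Vec<R> = thread::scope(|scope| {
            let handles: Vec<_> = chunk
                .iter()
                .map(|item| scope.spawn(move || work(item)))
                .collect();
            handles
                .into_iter()
                .map(|handle| handle.join().expect("worker thread panicked"))
                .collect()
        });
        results.extend(chunk_results);
    }
    results
}
```

With 44 files and `max_threads` set to 8, for instance, this would run six rounds of at most 8 threads each. The trade-off is that each round waits for its slowest file, so a thread pool may use the CPU better when file sizes vary a lot.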

Let’s put the calculation for each file in its own thread and return all checksums at once.
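
The thread-per-file idea can be sketched as follows. This is a hedged illustration rather than our actual library code: `checksums_for_all` is a hypothetical name, the `checksum` parameter stands for any per-file routine (such as one built on crc32fast), and the NAPI-RS export wrapper is left out.

```rust
use std::io;
use std::path::{Path, PathBuf};
use std::thread;

/// Spawns one OS thread per path and returns the checksums in input order.
/// `checksum` is a placeholder for the real per-file routine.
fn checksums_for_all(
    paths: Vec<PathBuf>,
    checksum: fn(&Path) -> io::Result<u32>,
) -> Vec<io::Result<u32>> {
    // Start every thread first so that the files are processed concurrently.
    let handles: Vec<thread::JoinHandle<io::Result<u32>>> = paths
        .into_iter()
        .map(|path| thread::spawn(move || checksum(&path)))
        .collect();

    // Then wait for each thread; a panicked worker is reported as an I/O error.
    handles
        .into_iter()
        .map(|handle| {
            handle.join().unwrap_or_else(|_| {
                Err(io::Error::new(
                    io::ErrorKind::Other,
                    "worker thread panicked",
                ))
            })
        })
        .collect()
}
```

Returning one `io::Result` per file, instead of failing the whole batch, lets the caller see exactly which files could not be read and re-download only those.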

How long does it take to call the native function with an array of paths and do all the calculations?

Only 150ms. Yes, it is THAT quick. To be 100% sure, we restarted our MacBook and ran two additional tests.

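Let’s bring all the results together and see how they compare. The JS implementation took around 800ms for a single file, ~16700ms for all files one by one, and ~16100ms with Promise.all. The Rust implementation took around 75ms for a single file, 730ms for all files one by one, and 150ms with one thread per file.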

It’s worth noting that calling the native function with an empty array takes 124584 nanoseconds, which is about 0.12ms, so the overhead of the call itself is very small.

As mentioned in the beginning, all of this applies to Web APIs, CLI tools, and Electron. Basically, to everything where Node.js is used.

But with Electron, there is one more thing to remember. Electron apps are usually packaged into an archive called app.asar. Some Node modules, such as native .node addons, must be unpacked in order to be loaded by the runtime. Most packaging tools, like Electron Builder or Electron Forge, automatically keep those modules outside the archive file, but our library might still end up inside the asar file. If so, you should specify which libraries should remain unpacked (for example, with electron-builder's asarUnpack option). It’s not mandatory, but it will reduce the overhead of unpacking and loading these .node files.

As you can see, there are multiple ways of speeding up parts of your Electron application, especially when it comes to doing heavy computations. Luckily, developers can choose from different languages and strategies to cover a wide spectrum of use cases.

In our app, verifying files is only part of the whole launcher process. The slowest part for most players is downloading the files, but this cannot be optimized beyond what your internet service provider offers. Also, some players have older machines with HDDs, where I/O rather than the CPU might be the bottleneck.

But if there is something we can improve and make more performant at reasonable costs, we should strive for it. If there are any functions or modules in your application that can be rewritten in either Rust or C, why not try experimenting? Such optimizations could significantly improve your app’s overall performance.
