Learn how we sought a way to efficiently extract key information from PDF pitchdecks, including dealing with the challenges posed by graphical elements and unstructured content.

A QUICK SUMMARY – FOR THE BUSY ONES

A company approached us with the problem of sifting through a large quantity of data in the form of pitchdecks. We were tasked with automating the extraction of the most important information from an unstructured, hard-to-parse format: PDF.

Getting the text contents of the PDF was just the beginning. The text in a PDF is all over the place: we had slides with two or three words, some tables, lists, or just paragraphs squished between images.

Read the whole article to learn more about our findings.

A company approached us with the problem of sifting through a large quantity of data in the form of pitchdecks. While each pitchdeck is fairly short, in most cases around 10 slides, the issue is the sheer number of them to analyze. We were tasked with automating the extraction of the most important information from an unstructured, hard-to-parse format: PDF. Additionally, the data comes as slides, with a lot of graphical cues and geometric relations between words that convey information not easily inferred from the text itself. To make it easier to analyze a large amount of data, we needed a solution that would automate as much of that process as possible: from reading the document itself to finding interesting pieces of information like the names of people involved, financial data, and so on.

The first issue we faced was getting the text contents out of a PDF file. Extracting text directly from the PDF with open-source tools like pdf-parse (which is used internally by langchain’s pdf-loader) did the job most of the time, but we still had some issues with it: some PDFs were not parsed correctly and the tool returned an empty string (as in the case of the Uber sample pitchdeck), some words came back split into individual characters, and so on.
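The two failure modes described above (an empty result and words split into single characters) can be detected with a simple heuristic before deciding whether a page needs a different extraction method. The sketch below is only an illustration: the function name `looks_broken` and both thresholds are assumptions, not part of the project described here.

```python
def looks_broken(text: str, max_single_ratio: float = 0.5, min_tokens: int = 5) -> bool:
    """Heuristically flag extracted text that probably needs another extraction method.

    Both thresholds are illustrative assumptions and should be tuned on real decks.
    """
    tokens = text.split()
    # Case 1: the parser returned an empty (or whitespace-only) string.
    if not tokens:
        return True
    # Very short slides (two or three words) are legitimate, so do not judge them.
    if len(tokens) < min_tokens:
        return False
    # Case 2: words split into individual characters, e.g. "P r o b l e m".
    single_letters = sum(1 for t in tokens if len(t) == 1 and t.isalpha())
    return single_letters / len(tokens) > max_single_ratio
```

A page for which `looks_broken` returns `True` could then be routed to an OCR-based extractor instead of being passed on as-is.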

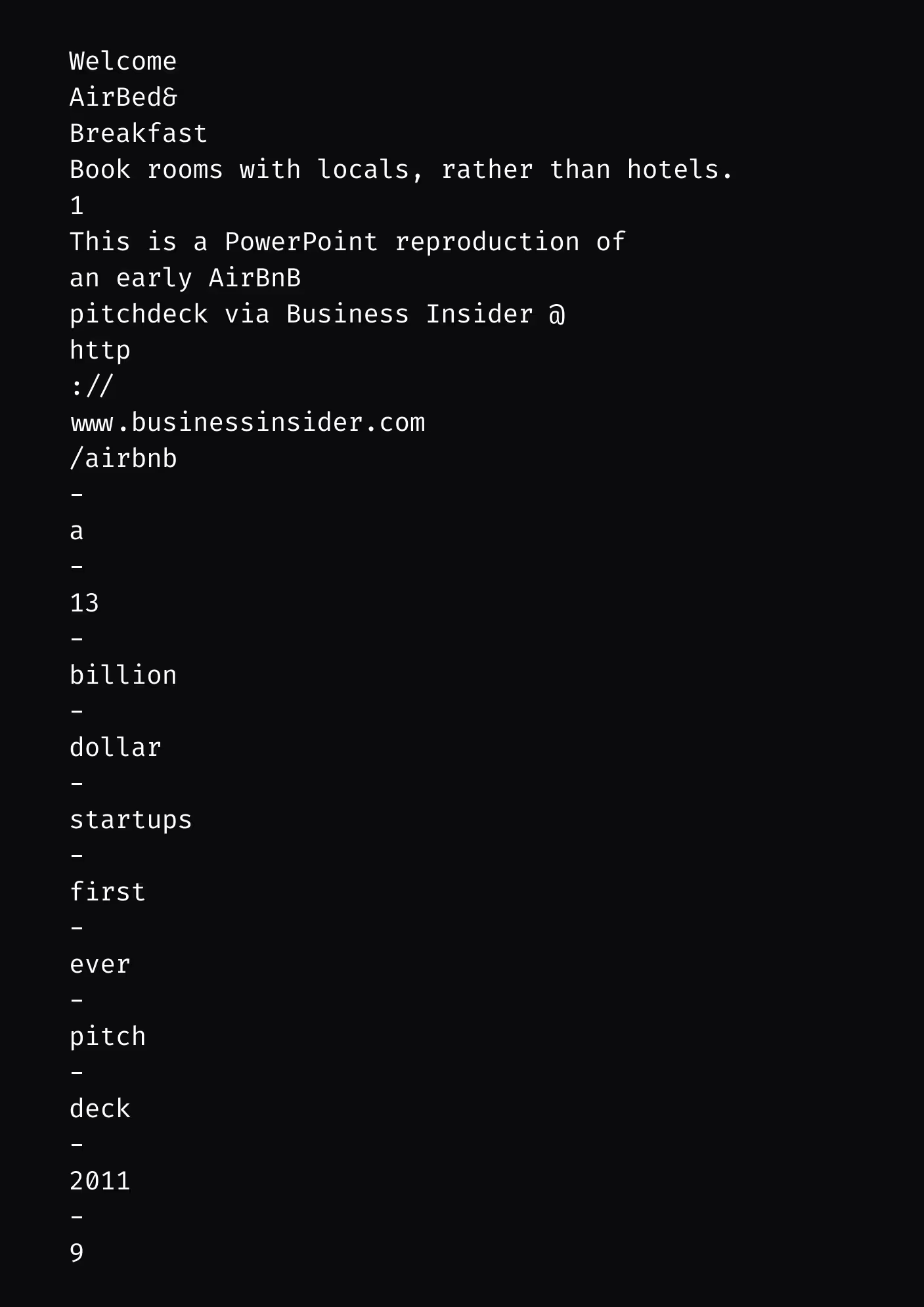

Unfortunately, getting the text contents of the PDF was just the beginning. The text in a PDF is all over the place: we had slides with two or three words, some tables, lists, or just paragraphs squished between images. Below is an example of text extracted from page 2 of a reproduction of the early AirBnB pitchdeck (extraction done with the pdf-parse library):

And this is one of the better ones!

While parsing text like this is hard in itself, we would also like to be able to change what we extract from the text. We may want to know which people are involved in a business. Or we may want all the financial data, or just the name of the industry. Each type of extracted data requires a different approach to parsing and validating the text, and then a lot of testing.

First, we decided to leave open-source solutions behind and used AWS Textract to parse the PDF files. This way we don’t rely on the internal structure of the PDF to get text from it (or to get nothing, as in the case of the Uber example). Textract uses OCR and machine learning to get not only the text but also spatial information from the document.

Here is the Textract result (with all geometric information stripped) from the same page of the AirBnB pitchdeck reproduction:

But that’s not all! Textract responds with a list of Blocks (like “Page”, or “Line” for a line of text), together with their positions and relationships, which we can use to better understand the structure of the document.

Most of the time, we don’t need such details, so in our case, we use only a fraction of them.
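As a rough illustration of using only a fraction of the Block data, the sketch below keeps just the `LINE` blocks from a Textract response and orders them by their bounding boxes. The field names (`BlockType`, `Text`, `Page`, `Geometry.BoundingBox.Top` and `Left`) follow the Textract Block format; the function name, the row-grouping trick, and the sample blocks are assumptions made up for this example.

```python
from typing import Any, Dict, List


def lines_in_reading_order(blocks: List[Dict[str, Any]], page: int = 1) -> List[str]:
    """Return the text of LINE blocks on one page, top-to-bottom, then left-to-right."""
    lines = [
        b for b in blocks
        if b.get("BlockType") == "LINE" and b.get("Page", 1) == page
    ]
    # BoundingBox values are ratios of the page size, with (0, 0) at the top-left.
    # Rounding Top is a crude way to treat lines at nearly the same height as one row.
    lines.sort(key=lambda b: (
        round(b["Geometry"]["BoundingBox"]["Top"], 2),
        b["Geometry"]["BoundingBox"]["Left"],
    ))
    return [b["Text"] for b in lines]


# Made-up sample blocks (not real Textract output) to show the expected shape.
sample_blocks = [
    {"BlockType": "PAGE", "Geometry": {"BoundingBox": {"Top": 0.0, "Left": 0.0}}},
    {"BlockType": "LINE", "Text": "right column",
     "Geometry": {"BoundingBox": {"Top": 0.101, "Left": 0.6}}},
    {"BlockType": "LINE", "Text": "left column",
     "Geometry": {"BoundingBox": {"Top": 0.104, "Left": 0.1}}},
    {"BlockType": "LINE", "Text": "Title",
     "Geometry": {"BoundingBox": {"Top": 0.02, "Left": 0.3}}},
]
```

Sorting by rounded position is only a heuristic: it works for simple slides, but multi-column layouts and tables would likely need the relationship data that Textract also returns.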

Now to actually parse the text and pull what we want from it. For that, the only solution that seemed viable was to use a language model. While we tested some open-source solutions, they were not up to the task: hallucinations were too common and responses too unpredictable. Additionally, most of the capable open-source models available today are not licensed for commercial use. So we went with the OpenAI GPT-3.5 and GPT-4 models.

We’ve decided to first let the model summarise the text and include all information from the pitchdeck in that summary. That way we have text that is complete (not just the outline) and has a structure that is easier to work with. We’ve used the following prompt for each page of the document:

With additional instructions like “avoid adding your own opinions or assumptions” we minimize hallucinations (models like to add fake data to the summary; GPT-3.5 even added a completely fake financial analysis!). Once we have a summary of all pages, we can ask the model to extract information from it. Here is an example of the prompt we’ve used to get the list of people referenced in the document:

The summarisation returned by the models (both GPT-3.5 and GPT-4) is of good quality: the information returned is factual, and whatever is plainly stated in the document ends up in the summary as well.
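The two-stage flow described above (summarise every page, then extract from the combined summary) can be sketched roughly as follows. The prompt wording here is hypothetical and written only for illustration; it is not the prompt used in the project, apart from the quoted instruction about opinions and assumptions. The `complete` parameter is an assumed stand-in for whatever function sends a prompt to the chosen model and returns its reply.

```python
from typing import Callable, List

# Hypothetical prompt wording, for illustration only.
SUMMARY_PROMPT = (
    "Summarise the following pitchdeck page. Include all information from the page. "
    "Avoid adding your own opinions or assumptions.\n\n"
    "Page text:\n{page}"
)
EXTRACT_PROMPT = (
    "List the people referenced in the summary below, one per line. "
    "Only use information stated in the summary.\n\n"
    "Summary:\n{summary}"
)


def summarise_then_extract(pages: List[str], complete: Callable[[str], str]) -> str:
    """Summarise each page separately, then run one extraction over all summaries."""
    # Stage 1: one model call per page keeps each prompt short and focused.
    summaries = [complete(SUMMARY_PROMPT.format(page=page)) for page in pages]
    full_summary = "\n\n".join(summaries)
    # Stage 2: a single extraction call over the combined summary.
    return complete(EXTRACT_PROMPT.format(summary=full_summary))
```

Passing the model call in as a function keeps the sketch independent of any particular client library and makes the flow easy to test with a fake model.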

However, extracting the list of people is a different story. The models, especially GPT-3.5, often answer with a list similar to this (not an actual response):

Not only is this clearly not a correct list of people, but the email was not in the source text at all; the model made it up!
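One way to catch made-up details like that email is to check every extracted item against the text that was actually read from the document. The sketch below is an assumption about how such a check could look, not something described in this case study; the function names and the sample names are invented for the example.

```python
import re
from typing import List

# Simple pattern for e-mail addresses; good enough for a sanity check.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def normalise(text: str) -> str:
    """Lower-case the text and collapse all whitespace runs to single spaces."""
    return " ".join(text.lower().split())


def unsupported_items(items: List[str], source_text: str) -> List[str]:
    """Return the items that do not literally appear in the source text."""
    haystack = normalise(source_text)
    return [item for item in items if normalise(item) not in haystack]


def hallucinated_emails(answer: str, source_text: str) -> List[str]:
    """Return e-mail addresses found in the model answer but not in the source."""
    return unsupported_items(EMAIL_RE.findall(answer), source_text)
```

For example, with a source text of `"Our team: Jane Example (CEO)"`, the answer `"Jane Example, jane@example.com"` would have its email flagged. A literal match is strict, so it would also flag legitimate rewordings; it is meant as a first filter rather than a full validation step.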

We’ve also experimented with many variations of that prompt like:

What we are still missing, and what is probably the most difficult part, is the ability to interpret the images and spatial relationships in PDF slides. While AWS Textract returns some spatial information, it does not recognize images, and the data it returns is hard to pass to the model. We’re still investigating how to make the model understand arrows, charts, and tables. Additionally, we would like to automate online research, e.g. finding more information about companies mentioned in the documents using available APIs (like Crunchbase) or fetching more data on the people involved.

The case study addresses automating the extraction of vital details from numerous PDF pitchdecks. These decks are concise but numerous, making manual analysis impractical. The challenge involves extracting text and interpreting graphical elements. AWS Textract was employed for text extraction due to its OCR and layout understanding capabilities. OpenAI's GPT-3.5 and GPT-4 models were used to summarize and extract information, yet challenges arose in accurately extracting specific data like people's names or financial data. The study acknowledges the need to enhance image interpretation to understand visual elements better.
